SEO crawl budget is crucial for ensuring search engines efficiently crawl and index your website's most important pages. Optimizing this budget can lead to better visibility and faster updates in search results. At Keyword Metrics, we’re here to help you learn more about this topic in depth.

What is SEO Crawl Budget?

SEO crawl budget refers to the number of pages on your website that search engine bots, like Googlebot, can and will crawl within a given timeframe. Think of it as a time or resource allocation that search engines use to decide how much of your website they’ll explore.

Optimizing your crawl budget helps ensure that critical pages are crawled frequently, leading to better indexing and improved SEO performance.

How SEO Crawl Budget Works

Search engines allocate crawl budgets based on two factors: crawl rate and crawl demand.

- Crawl Rate: This is the number of requests a search engine bot makes to your site in a set period. It depends on your server’s capacity to handle requests without slowing down.

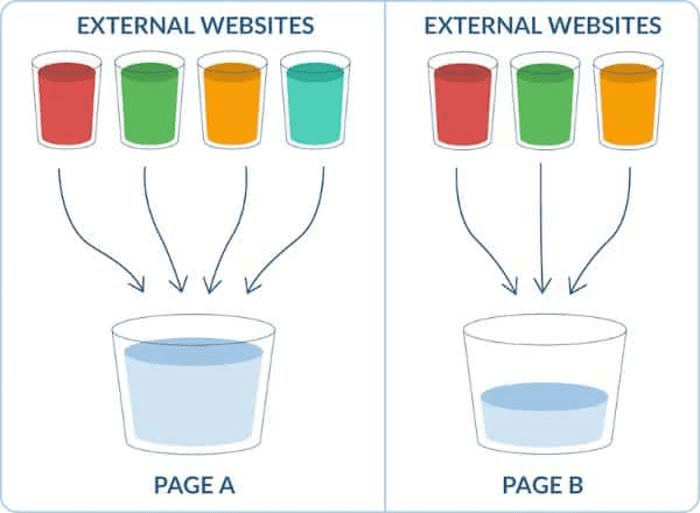

- Crawl Demand: This depends on the popularity and freshness of your content. Pages with high traffic or recent updates are crawled more often.
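Google doesn't publish a formula for crawl budget, but its documentation describes the effective budget as the URLs Googlebot both can crawl (capacity) and wants to crawl (demand), with capacity rising when a site responds quickly and falling when it slows down or returns server errors. The toy model below sketches that idea under loudly hypothetical assumptions: `CrawlSignals`, `effective_crawl_budget`, and `adjust_capacity` are illustrative names, and the thresholds (1,000 ms, 300 ms, 5% errors) are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class CrawlSignals:
    capacity_per_day: int  # hypothetical: fetches the server can absorb without slowing down
    demand_per_day: int    # hypothetical: URLs the engine wants to (re)crawl, from popularity and freshness


def effective_crawl_budget(signals: CrawlSignals) -> int:
    """Toy model: crawl no more than the server can handle or than the engine wants."""
    return min(signals.capacity_per_day, signals.demand_per_day)


def adjust_capacity(capacity: int, avg_response_ms: float, error_rate: float) -> int:
    """Toy throttle with invented thresholds: back off on slow or failing responses, grow when fast."""
    if error_rate > 0.05 or avg_response_ms > 1000:
        return max(1, capacity // 2)  # halve the request rate when the server struggles
    if avg_response_ms < 300:
        return int(capacity * 1.1)    # cautiously probe for more headroom
    return capacity                   # otherwise keep the current rate


if __name__ == "__main__":
    signals = CrawlSignals(capacity_per_day=5000, demand_per_day=2000)
    print(effective_crawl_budget(signals))                              # 2000: demand is the bottleneck
    print(adjust_capacity(5000, avg_response_ms=1500, error_rate=0.0))  # 2500: slow server, capacity halves
```

In this model, a faster server only helps if demand is also high; a popular site on a struggling server is capped by capacity instead.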

Key Processes

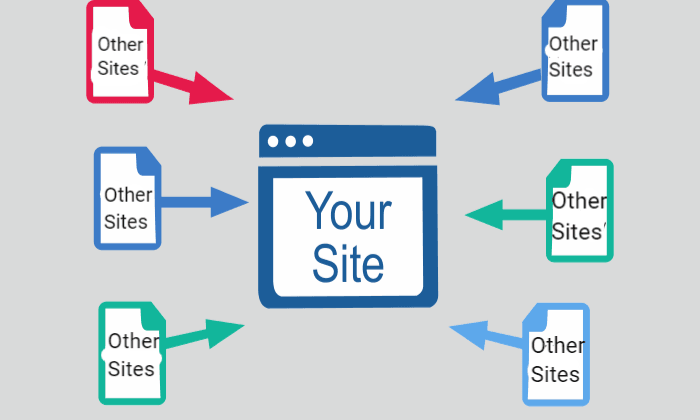

- Discovery: Search engine bots find new pages or changes on your site through internal and external links and XML sitemaps.

- Crawling: Bots fetch the discovered pages and follow the links on them to find more URLs.

- Indexing: Relevant content from crawled pages is added to the search engine's index.
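To make the three stages concrete, here is a minimal sketch of a breadth-first crawler over a hypothetical in-memory site (`SITE`); it is an illustration, not how any real search engine is implemented. Discovery adds newly seen links to a queue, crawling takes URLs off that queue, and indexing stores each fetched page's text. The `max_fetches` parameter stands in for a crawl budget: once it runs out, anything still queued stays uncrawled.

```python
from collections import deque

# Hypothetical site: each URL maps to (page text, outgoing internal links).
SITE = {
    "/": ("Home page", ["/shoes", "/blog"]),
    "/shoes": ("Running shoes", ["/shoes/trail", "/"]),
    "/shoes/trail": ("Trail shoes", []),
    "/blog": ("Blog index", ["/blog/post-1"]),
    "/blog/post-1": ("How to choose shoes", ["/shoes"]),
}


def crawl_and_index(start: str, max_fetches: int) -> dict[str, str]:
    """Breadth-first discovery and crawling that stops when the fetch budget runs out."""
    frontier = deque([start])  # discovered but not yet crawled
    discovered = {start}
    index = {}                 # URL -> stored page text
    fetches = 0
    while frontier and fetches < max_fetches:
        url = frontier.popleft()
        fetches += 1           # every request spends budget, even if the page is missing
        page = SITE.get(url)
        if page is None:
            continue
        text, links = page
        index[url] = text      # indexing: keep content from the crawled page
        for link in links:     # discovery: new URLs come from links on crawled pages
            if link not in discovered:
                discovered.add(link)
                frontier.append(link)
    return index


if __name__ == "__main__":
    print(sorted(crawl_and_index("/", max_fetches=3)))
```

With a budget of three fetches, only `/`, `/shoes`, and `/blog` are indexed; `/shoes/trail` and `/blog/post-1` have been discovered but must wait for a later crawl.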

For Google’s own recommendations, see its guide to managing crawl budget in the Google Search Central documentation.

Why Optimizing SEO Crawl Budget Is Important

Large Websites

For websites with thousands or millions of pages (e.g., e-commerce platforms), an optimized crawl budget ensures that essential pages are crawled and indexed promptly.

Faster Updates in Search Results

If you frequently update content (e.g., blogs or news), a good crawl budget strategy helps search engines detect these changes quickly.

Detecting Technical Issues

A poorly managed crawl budget means bots may waste time crawling unimportant pages, leaving critical content undiscovered or stale. Issues like duplicate pages, broken links, or bloated sitemaps reduce crawling efficiency.

Pro Tips for Using SEO Crawl Budget Effectively

Clean Up Duplicate Content

Duplicate content wastes your crawl budget. Use canonical tags to consolidate duplicate pages or remove unnecessary duplicates altogether.

Tool Tip: Tools like Screaming Frog can help you identify duplicate content.
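Before adding canonical tags, you need to know which URLs serve the same content. A minimal sketch, assuming you already have each page's HTML in a dictionary: strip tags, normalize whitespace and case, then group URLs by a SHA-256 hash of what remains. This catches only exact duplicates after normalization; crawlers like Screaming Frog also flag near-duplicates, which this sketch does not attempt. The helper names are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def normalize(html_text: str) -> str:
    """Crude normalization: drop tags, collapse whitespace, lowercase."""
    text = re.sub(r"<[^>]+>", " ", html_text)
    return re.sub(r"\s+", " ", text).strip().lower()


def find_exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized content hashes to the same value."""
    groups = defaultdict(list)
    for url, html_text in pages.items():
        digest = hashlib.sha256(normalize(html_text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    # Only groups with more than one URL are duplicates worth consolidating.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


if __name__ == "__main__":
    pages = {
        "/shirt": "<h1>Blue Shirt</h1><p>Cotton.</p>",
        "/shirt?ref=email": "<h1>Blue  Shirt</h1>\n<p>Cotton.</p>",
        "/pants": "<h1>Pants</h1>",
    }
    print(find_exact_duplicates(pages))  # [['/shirt', '/shirt?ref=email']]
```

For each group, pick one URL as the canonical and point the others at it with `<link rel="canonical" href="...">`.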

Optimize Your Robots.txt File

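Use the robots.txt file to block search engine bots from crawling non-essential pages, such as admin panels, cart pages, or filtered URLs. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still be indexed without its content if other pages link to it.

Example:

User-agent: *
Disallow: /wp-admin/
Disallow: /cart/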
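You can check rules like these before deploying them with Python's standard-library `urllib.robotparser`. The sketch below wraps the two example rules in a `User-agent: *` group (rules only take effect inside a group that names a user agent) and reports which sample URLs a bot would be blocked from. The `example.com` URLs and the `blocked_urls` helper are hypothetical. One caveat: `robotparser` does plain prefix matching and does not implement Google's `*` and `$` wildcard extensions, so treat it as a check for simple path prefixes only.

```python
from urllib.robotparser import RobotFileParser

# The article's example rules, wrapped in a group that applies to every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/
"""


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that `agent` may not fetch under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly instead of fetching it over HTTP
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    candidates = [
        "https://example.com/wp-admin/options.php",
        "https://example.com/cart/",
        "https://example.com/products/blue-shirt",
    ]
    print(blocked_urls(ROBOTS_TXT, candidates))
```

Only the admin and cart URLs are reported; the product page stays crawlable.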

Prioritize High-Value Pages

Focus the crawl budget on pages that drive traffic, like your best-performing blog posts, category pages, or top-selling products.

Actionable Tip: Keep your XML sitemap updated with only the most important URLs.
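A sitemap limited to high-value URLs can be generated from whatever page inventory you already keep. The sketch below assumes a hypothetical `Page` record with flags for indexability, canonical status, and business value (your own data would decide those) and writes only qualifying URLs into a sitemap using the standard `http://www.sitemaps.org/schemas/sitemap/0.9` namespace.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    loc: str          # absolute URL
    lastmod: str      # W3C date, e.g. "2024-05-01"
    indexable: bool   # hypothetical flag: returns 200 and is not noindex
    canonical: bool   # hypothetical flag: the page is its own canonical URL
    high_value: bool  # hypothetical flag: chosen from your own traffic or sales data


def build_sitemap(pages: list[Page]) -> str:
    """Serialize only indexable, canonical, high-value pages into sitemap XML."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if not (page.indexable and page.canonical and page.high_value):
            continue  # keep low-value, duplicate, and non-indexable URLs out
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page.loc
        ET.SubElement(url, "lastmod").text = page.lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    inventory = [
        Page("https://example.com/products/blue-shirt", "2024-05-01", True, True, True),
        Page("https://example.com/shirts?color=blue", "2024-05-01", True, False, False),
    ]
    print(build_sitemap(inventory))
```

The filtered URL is left out because it is not its own canonical, so the sitemap points crawlers only at the product page.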

Monitor and Fix Crawl Errors

Use Google Search Console to identify and fix issues like 404 errors, broken links, and server errors that can waste crawl resources.
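Your server's access logs record each request Googlebot makes, which complements the Search Console reports. A minimal sketch, assuming your server writes the Apache/Nginx "combined" log format: count the status codes returned to requests whose user agent contains `Googlebot`, and tally the paths that returned 404, 410, or 5xx, since those fetches spend crawl budget without producing indexable pages. User-agent strings can be spoofed, so for a real audit verify Googlebot by reverse DNS lookup, as Google recommends. The function name and sample lines are hypothetical.

```python
import re
from collections import Counter

# Assumes the "combined" access-log format used by default in Apache and Nginx.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_summary(lines):
    """Return (status code counts, wasted-fetch counts per path) for Googlebot requests."""
    statuses = Counter()
    wasted = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and other user agents
        status = int(match["status"])
        statuses[status] += 1
        if status in (404, 410) or status >= 500:
            wasted[match["path"]] += 1  # fetches that cannot lead to an indexed page
    return statuses, wasted


if __name__ == "__main__":
    bot = '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
    sample = [
        f'66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" {bot}',
        f'66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 512 "-" {bot}',
        '203.0.113.9 - - [10/May/2024:10:00:09 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "curl/8.0"',
    ]
    print(googlebot_status_summary(sample))
```

Paths that keep appearing in the wasted tally are candidates for redirects, restored content, or removal from internal links and sitemaps.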

Boost Internal Linking

Strategic internal linking helps search engine bots efficiently navigate your site. Ensure every important page is linked to from other parts of your site.
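Two useful internal-linking checks are click depth (how many links a bot must follow from the homepage to reach a page) and orphan pages (important URLs that no internal link points to). A minimal sketch using breadth-first search over a hypothetical link graph, for example one exported from a site crawler; `click_depths` and `orphan_pages` are illustrative helper names.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage: the fewest clicks needed to reach each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphan_pages(links: dict[str, list[str]], home: str, important: list[str]) -> list[str]:
    """Important URLs (e.g. from your sitemap) that internal links never reach."""
    reachable = set(click_depths(links, home))
    return sorted(set(important) - reachable)


if __name__ == "__main__":
    links = {"/": ["/category"], "/category": ["/product-a"], "/product-a": []}
    important = ["/", "/category", "/product-a", "/product-b"]
    print(click_depths(links, "/"))             # {'/': 0, '/category': 1, '/product-a': 2}
    print(orphan_pages(links, "/", important))  # ['/product-b']
```

Pages with a large click depth, or none at all, are the ones to link from category pages, navigation, or related content.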

Tools to Manage and Optimize SEO Crawl Budget

To effectively manage and optimize your crawl budget, several tools can help you monitor, identify issues, and prioritize key pages for crawling. Here are some popular tools you can use:

1. Google Search Console

Google Search Console is a free tool that provides in-depth insights into how Google crawls and indexes your website. It helps you monitor crawl errors, page indexing status, and overall crawl activity.

Key Features:

- Crawl Stats Report: See how often Googlebot crawls your site, along with response times and the status codes it receives.

- Page Indexing Report (formerly the Coverage report): Identify pages excluded from the index due to errors or other issues.

- URL Inspection Tool: Check if a page has been crawled and indexed correctly.

2. Screaming Frog SEO Spider

Screaming Frog is a powerful website crawler that lets you analyze how search engines crawl your website. It helps identify issues like broken links, duplicate content, and other technical SEO problems that could affect your crawl budget.

Key Features:

- Crawls your entire website and identifies potential SEO issues.

- Generates an XML sitemap for important pages to focus on.

- Finds duplicate content, helping you optimize your crawl budget by removing unnecessary pages.

3. Ahrefs

Ahrefs is a comprehensive SEO toolset that includes a site audit feature to monitor crawlability. It allows you to track crawl errors and see which pages are getting the most backlinks, helping you prioritize high-value pages for crawlers.

Key Features:

- Site Audit Tool: Provides a crawlability overview and identifies crawl-related issues.

- Backlink Analysis: Helps prioritize high-value pages by showing backlink data.

Practical Example of SEO Crawl Budget Optimization

Imagine an e-commerce website with:

- 10,000 products.

- Hundreds of filters for color, size, and brand.

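If search engines crawl every filter combination, they may spend most of the budget on near-duplicate listing URLs and miss critical product pages. The site can refocus its crawl budget on the pages that matter by: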

- Blocking filter pages using robots.txt.

- Adding canonical tags to variant pages.

- Prioritizing the main product pages in the XML sitemap.
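The arithmetic behind the problem is worth seeing. Assuming each facet is optional and takes at most one value, a single listing page with facets of c, s, and b values can produce (c+1)(s+1)(b+1) - 1 filtered URL variants. With hypothetical counts of 10 colors, 8 sizes, and 50 brands, that is 11 × 9 × 51 - 1 = 5,048 variants per listing page, before multi-select filters or parameter reordering multiply it further. The sketch below computes that count and flags filter URLs by their query parameters; the parameter names in `FILTER_PARAMS` are assumptions about how such a shop might build its URLs.

```python
from math import prod
from urllib.parse import parse_qs, urlsplit

# Hypothetical facet parameter names; substitute the ones your site actually uses.
FILTER_PARAMS = {"color", "size", "brand"}


def filter_url_count(values_per_facet: dict[str, int]) -> int:
    """Filtered variants of one listing page when each facet is optional and single-valued."""
    # Each facet contributes (values + 1) choices: one of its values, or unset.
    # Subtract 1 for the unfiltered page itself.
    return prod(n + 1 for n in values_per_facet.values()) - 1


def is_filter_url(url: str) -> bool:
    """True if the URL's query string sets any known filter parameter."""
    params = set(parse_qs(urlsplit(url).query))
    return bool(FILTER_PARAMS & params)


if __name__ == "__main__":
    print(filter_url_count({"color": 10, "size": 8, "brand": 50}))     # 5048
    print(is_filter_url("https://shop.example/shirts?color=blue&size=m"))  # True
    print(is_filter_url("https://shop.example/products/blue-shirt"))      # False
```

A classifier like this can help decide which URLs to keep out of the XML sitemap and which patterns to cover with robots.txt rules.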

FAQs on SEO Crawl Budget

Q. What happens if my website exceeds its crawl budget?

A. When your site has more URLs than search engines are willing to crawl in a given period, they might skip some pages, especially low-priority or poorly optimized ones. This can lead to outdated or unindexed content in search results.

Q. How can I check my site's crawl budget usage?

A. You can monitor crawl budget usage in Google Search Console under Settings > Crawl stats. The report shows how often Googlebot crawls your site and highlights crawling issues such as server errors.

Q. Do small websites need to worry about crawl budgets?

A. Generally, small websites with fewer than a few thousand pages don't need to worry about crawl budget, because search engines can usually crawl them efficiently. However, good technical SEO practices keep crawling efficient regardless of site size.

Related Glossary Terms to Explore

- SEO Internal Linking: Discover how strategic internal linking helps search engines crawl and index your website more efficiently.

- XML Sitemap: Find out how an XML sitemap directs search engines to the most important pages on your site, improving crawl efficiency.

- Robots.txt: Learn how the robots.txt file helps control which pages search engine bots can and can’t crawl, optimizing your crawl budget.